At GOStack, we recently kicked off a project that doesn’t come around very often: migrating a client’s data platform from Google Cloud Platform (GCP) to Amazon Web Services (AWS). As the title suggests, this is the first post in a series documenting that journey. In part one, we’ll share why we’re migrating now, what success looks like, and how core GCP services map to AWS.

Most data migrations happen because a platform is outdated or can’t keep up anymore. But in this case, the client already had a modern setup on GCP — BigQuery, DataProc, Dataflow, Pub/Sub, Cloud Functions, and just a handful of on-prem systems. It is working well enough, but the architecture has grown complex and harder to maintain. It isn’t broken, but it also isn’t as streamlined or future-ready as it should be.

So why move?

The first obvious reason – because most of the client’s production applications and infrastructure now live in AWS (recently migrated by GoStack). All of the new DevOps stack is already running there, which means data have to keep moving back and forth between clouds. Keeping the data platform isolated in GCP while everything else runs in AWS creates friction: two clouds to secure, duplicated CI/CD pipelines, fragmented monitoring, and more operational overhead than necessary.

Many services in the current setup are due for an upgrade. Over time, different teams have built and maintained two parallel data platforms, which adds to the complexity and operational overhead.

Since we got involved, we are starting to look at the whole architecture as a single system — and the migration is the opportunity to consolidate, modernize, and retire legacy components along the way. It is also our chance to unify the data platform into a Lakehouse architecture — an approach that didn’t even exist when the original system was first built. With AWS’s strong investment in Iceberg support in recent years, it now stands out as a natural fit for this modernization.

Another big challenge is the lack of agility for the data team. Because so much effort goes into maintaining and fixing the legacy platform, there is less time to explore new opportunities that could bring value to the company. This is especially limiting now, with the rapid growth of AI and advanced analytics — areas where the business wants to move faster but is held back by technical debt.

That gives us the rare chance to re-imagine a working data platform — not because it is broken, but because aligning it with the rest of the ecosystem unlocks new possibilities. And modernizing along the way is never wasted effort: it’s an investment that keeps the client ready for what comes next — including supporting new AI and advanced analytics initiatives.

It’s not just a lift-and-shift. It’s a chance to rebuild the platform on stronger foundations and prepare it for the future.

What success looks like

Defining success in a project like this is tricky. On the surface, it’s easy to say: success means we’ve moved everything from GCP to AWS. But that would miss the bigger picture.

For us and our client, success is about more than just completing the migration. It’s about bringing the whole technology landscape together on AWS — applications, infrastructure, and data — so everything works in one place.

The data platform is a big part of that. It’s not only the foundation for analytics and decision-making, but also a core piece of the company’s overall tech stack. Having it in the same cloud as applications and infrastructure makes life easier for everyone — from DevOps and platform engineers to support teams — because they no longer have to juggle different security models, tools, or monitoring systems.

Concretely, here’s what that means

- Keep overhead low: Less fragmentation, fewer tools to maintain, and one cloud environment instead of two.

- Save costs where possible: More transparency, easier optimization, and less duplication across teams.

- Prefer managed services: Leaning on AWS offerings instead of building custom apps makes the platform easier to operate and evolve.

- Build for the future: Real-time data, machine learning, advanced analytics — the new setup should make these opportunities easier to adopt.

In short, success means the client comes out of this journey not just with their data moved, but with a modernized tech infrastructure that is cleaner, easier to run, and ready for whatever comes next.

Choosing technology stack

Once we knew the client’s data platform was moving to AWS, the next question was: what should the new stack look like?

We could have gone straight for an AWS-native solution and called it a day. But migrations like this are also a rare chance to take a step back, look at the market, and ask: is there a better way to modernize the platform?

That meant exploring not just AWS-native services, but also other strong contenders like Snowflake, Databricks, and ClickHouse — all of which can run on AWS. Each of them has its own strengths, trade-offs, and sweet spots.

At the same time, we wanted to keep the bigger picture in mind: building on open standards where possible, so the client isn’t locked into a single vendor or cloud. Open storage formats, modular compute, and cloud-agnostic architectures give the flexibility to evolve in the future, without having to re-platform again.

What follows is a quick overview of the main choices we evaluated and how they stack up against each other.

AWS Native (Redshift + Athena on Iceberg + Glue/Lake Formation)

Our first stop was the obvious one: the AWS-native ecosystem. The appeal here is tight integration with everything else already running in AWS. Using Iceberg for open table formats gives us schema evolution and open storage, while Redshift can handle BI concurrency at scale. Athena adds a flexible, serverless SQL layer on top. Governance is well covered through Glue and Lake Formation, which makes it easier to centralize security and manage tiered access.

In many ways, this feels like the most natural fit if the goal is alignment and simplicity. The trade-off, of course, is that it ties us deeply into the AWS way of doing things.

Snowflake

We also looked closely at Snowflake, which in many ways feels like the closest cousin to BigQuery. It brings the same simplicity and elasticity — you scale up and down without thinking too much about infrastructure. For BI and reporting use cases, it’s smooth, with very little operational overhead.

The downside? Snowflake remains a largely closed ecosystem. While it does support external Iceberg tables, Iceberg isn’t a first-class storage layer in Snowflake — it’s more of an integration feature than a foundation. That makes it less attractive if the goal is to build an open Lakehouse architecture where multiple engines can operate on the same data. Snowflake is great for simplicity, but less ideal if long-term flexibility and openness are priorities.

Databricks

Then there’s Databricks — a heavyweight when it comes to advanced data engineering and ML. With Delta Lake, you get ACID guarantees, schema evolution, and streaming baked in. It supports SQL-first BI as well as more complex data science workflows.

The trade-off is that Databricks has a strong focus on Spark as the core execution engine. That’s powerful for advanced use cases, but it also means leaning heavily on one engine for most workloads. For this migration, we want to keep the platform simple and avoid binding everything to Spark, especially since many of the client’s current needs are SQL-driven pipelines and BI reporting that don’t require Spark-level complexity.

There’s also the issue of cost. From what we’ve seen, Databricks is typically the most expensive option — not just in licensing and compute, but also in the engineering effort required to operate it well. Running clusters efficiently, managing governance, and keeping costs under control demands deeper specialization, which adds overhead compared to AWS-native services or Snowflake.

Databricks is still compelling if the roadmap leans heavily into ML and advanced data engineering, but given the current priorities, it introduces more complexity and cost than the client — or our team — needs right now.

ClickHouse

Finally, we explored ClickHouse. This isn’t a general-purpose data warehouse, but when it comes to low-latency OLAP and event analytics, it’s lightning fast. Real-time dashboards and time-series queries absolutely fly.

That said, it’s not designed to be the foundation of a company-wide data platform or semantic layer. Instead, it shines as a specialized engine alongside other components. We saw it as more of a complement to a core warehouse rather than the centerpiece of the stack.

Wrapping up the evaluation

After weighing the options, we kept coming back to the same conclusion: while Snowflake and Databricks bring a lot to the table — and ClickHouse shines in specific niches — the AWS-native stack was the best fit for this client.

Why? Because as mentioned in the introduction, the broader company infrastructure is already in AWS, and going native keeps everything more coherent and easier to operate. At the same time, by leaning on open formats like Iceberg, we still get the flexibility to evolve and avoid heavy vendor lock-in.

That decision set the direction for the rest of the migration: instead of re-platforming around Snowflake or Databricks, we’d rebuild the data platform natively on AWS, mapping each GCP component to its closest AWS equivalent — and modernizing along the way.

Transition risks and what we’ve learned

A data platform switchover is rarely a big-bang event. Moving everything at once would create too much risk — bigger blast radius, harder recovery, and little room to validate. Instead, the migration is staged, with coexistence windows, reversible checkpoints, and parity tests along the way.

One important lesson so far is the need to stay downstream compatible during the transition. In practice, that sometimes means running a compatibility layer — even copying data back into GCP — so dependent teams can keep their processes running while they adapt. It’s not glamorous, but it’s critical for making the cutover safe.

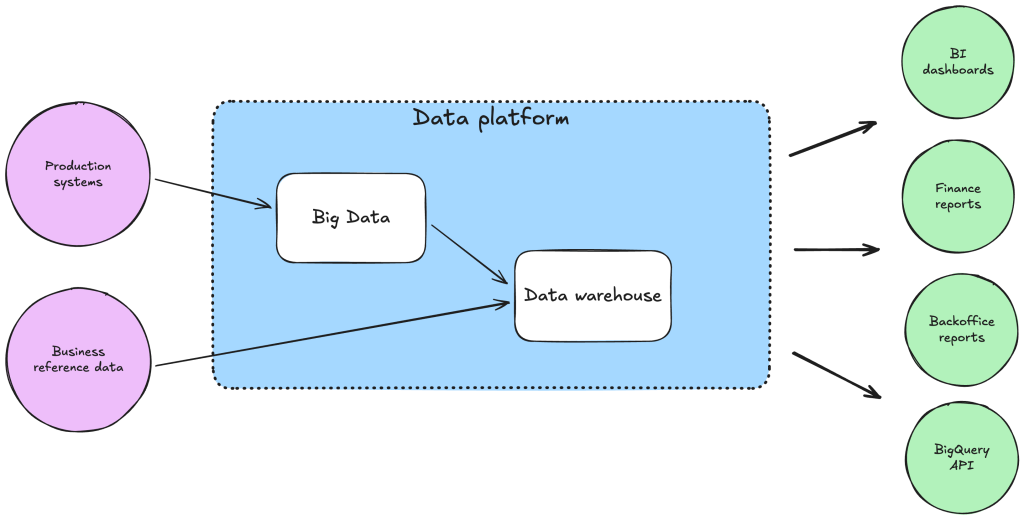

It’s also worth stressing that the data platform is more than just BigQuery. It’s the full ecosystem — streaming (Pub/Sub), orchestration, batch compute (Dataproc), transformations, governance, and monitoring. Migrating it means modernizing across all those dimensions, not just swapping out one service.

Apache Iceberg sits at the center of the new AWS design, keeping storage open and query engines interchangeable. Governance has to be baked in from day one, with Lake Formation enforcing fine-grained access. For orchestration, the client will transition from Airflow to Argo Workflows, consolidating pipelines on Kubernetes. Smaller event-driven tasks will be handled by Lambda and Glue.

In short: This isn’t just a one-to-one service swap. It’s about carefully moving a complex ecosystem, keeping the business running, and building a platform that’s more flexible and future-proof than before.

What’s next

The migration journey doesn’t end with service mapping. Ahead of us is the work of standing up the lakehouse — defining layered zones (raw, curated, analytics), wiring in first ingestion paths into Iceberg, and building out metadata and observability. Orchestration will be folded into the client’s existing DevOps workflows, and datasets and dashboards will move gradually, with contract tests and cutover checkpoints to keep things safe.

The goal isn’t a literal lift-and-shift, but a modern, trustworthy platform: open storage, flexible compute, stronger governance, and tighter alignment with the application stack.

In the next blog part, we’ll focus on the first big step of the migration: data ingestion into AWS — how we’re planning to land data streams reliably into Iceberg on S3, and the patterns we’re putting in place to make the rest of the journey smoother.